This post will look at how VMware prioritises Environmental, Social and Governance (ESG), and how it supports and accelerates its global customer base with their own sustainability initiatives. The focus will be on environmental sustainability in healthcare, specifically the UK National Health Service (NHS).

In October 2020, the NHS was the first health system globally to commit to delivering net zero, acknowledging that climate change and human health are inextricably linked. Sustainability ambitions for the NHS were previously laid out in the NHS Long Term Plan, and net zero was legislated through the Health and Care Act 2022 and the subsequent forming of Integrated Care Boards (ICBs).

The Delivering a Net Zero National Health Service Report is issued as statutory guidance for NHS trusts and ICBs. The report outlines two targets for the NHS carbon footprint. Firstly, emissions the NHS controls directly will be reduced to net zero by 2040, with an 80% reduction achieved by 2028-2032. Secondly, emissions the NHS can influence will be reduced to net zero by 2045, with an 80% reduction by 2036-2039. These targets are crucial to meeting the Climate Change Act 2008, since the NHS accounts for around 4% of the country’s carbon emissions. According to NHS England, an overwhelming 87% of NHS staff support the NHS Net Zero ambition, while 92% of the general public believe it is important for the NHS to work in a more sustainable way.

What does VMware do for sustainability?

VMware’s 2030 Agenda is a decade-long Environmental, Social and Governance (ESG) commitment to build a more sustainable and equitable future. VMware has been innovating for 25 years and, through the introduction of data centre virtualisation and consolidation, has already had an immeasurable impact on the efficiencies of global infrastructure and emissions.

The VMware Environmental Social and Governance Report 2023 sets out VMware’s sustainability strategy, focused on visibility (data), efficiency (resources), and renewables (energy). VMware has been certified as a carbon neutral company continuously since 2018, and has achieved 100% renewably sourced power for global facilities and co-located data centres continuously since 2019. Some of the key goals outlined in the VMware 2030 Agenda are listed below.

- Achieve net zero carbon emissions for operations and supply chain, and reduce emissions by 50% from an FY19 baseline, by 2030.

- Collaborate with enterprise public cloud partners to catalyse the transition to zero carbon clouds through the adoption of 100% renewable energy. At the time of writing 74 cloud providers have partnered with VMware for a sustainable future, including the likes of Microsoft Azure, Google Cloud, IBM Cloud, Oracle, Equinix, and others.

- Collaborate with global governments as a sustainable cloud advocate, to drive policy that makes IT infrastructure more reliable, scalable, flexible, secure, cost-effective and sustainable.

- Empower customers by enabling transparency into the carbon reduction impact of VMware solutions (more on this below).

VMware’s approach to sustainability goes far beyond decarbonising operations; it is also inherent to their technologies and innovation. VMware’s virtualisation solutions help reduce customers’ hardware and power consumption, improving efficiencies in data centre operations and management across compute, storage, network and security.

Furthermore, VMware holds itself, partners, and suppliers accountable for sustainability targets. At VMware Explore Europe 2023, mitigating the carbon footprint of an event with a 9000+ strong attendance was a top priority, addressed through responsible materials, a green venue, and innovative climate partnerships. VMware has a strong history in foundation and community support, so it comes as no surprise that when mitigating residual emissions, projects are selected that have the potential for systemic impact in communities. These carbon avoidance and removal projects include solar water heating, clean water and cooking, mangrove and forest restoration, wind power, and more.

VMware reports its climate strategy, targets, emissions, risks and opportunities in detail through an annual disclosure with the Carbon Disclosure Project (CDP). The reporting aligns to the recommendations provided by the Task Force on Climate-related Financial Disclosures (TCFD). In FY23 VMware was recognised on the CDP Climate A list for its environmental transparency and performance on climate change preparedness. You can see VMware’s full TCFD disclosure in the VMware Environmental Social and Governance Report 2023.

How does VMware help customers achieve their sustainability targets?

There are multiple ways in which VMware solutions help customers with their sustainability initiatives. This is not an exhaustive list, but rather the most common conversations I have with customers. The solutions are aligned to healthcare messaging but are applicable cross-industry at a high level, so you can apply your own use cases. If you’re interested in knowing more about this topic you can catch up on the Sustainability for Techies – Why You Should Care session recording from VMware Explore.

- A digital, low-carbon transformation:

  - Ensure data centres and companies providing these services minimise their environmental impact and support the drive to reach net zero

  - Front-line digitisation of clinical records, clinical and operational workflow and communications, aided by digital messaging and electronic health and care record systems

  - Digitising the estate and smart hospitals; ensuring large-scale migration of trust data centres into the hyper-scale cloud; and reducing the need for the storage of large volumes of data

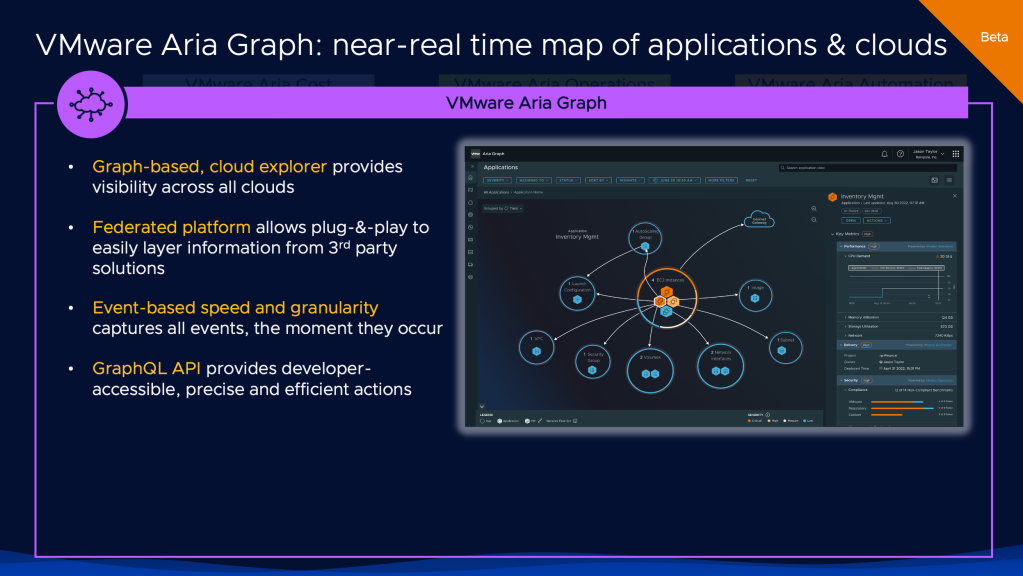

VMware customers can use Aria Operations (formerly vRealize Operations) to directly gather and track information on the environmental sustainability of on-premises infrastructure.

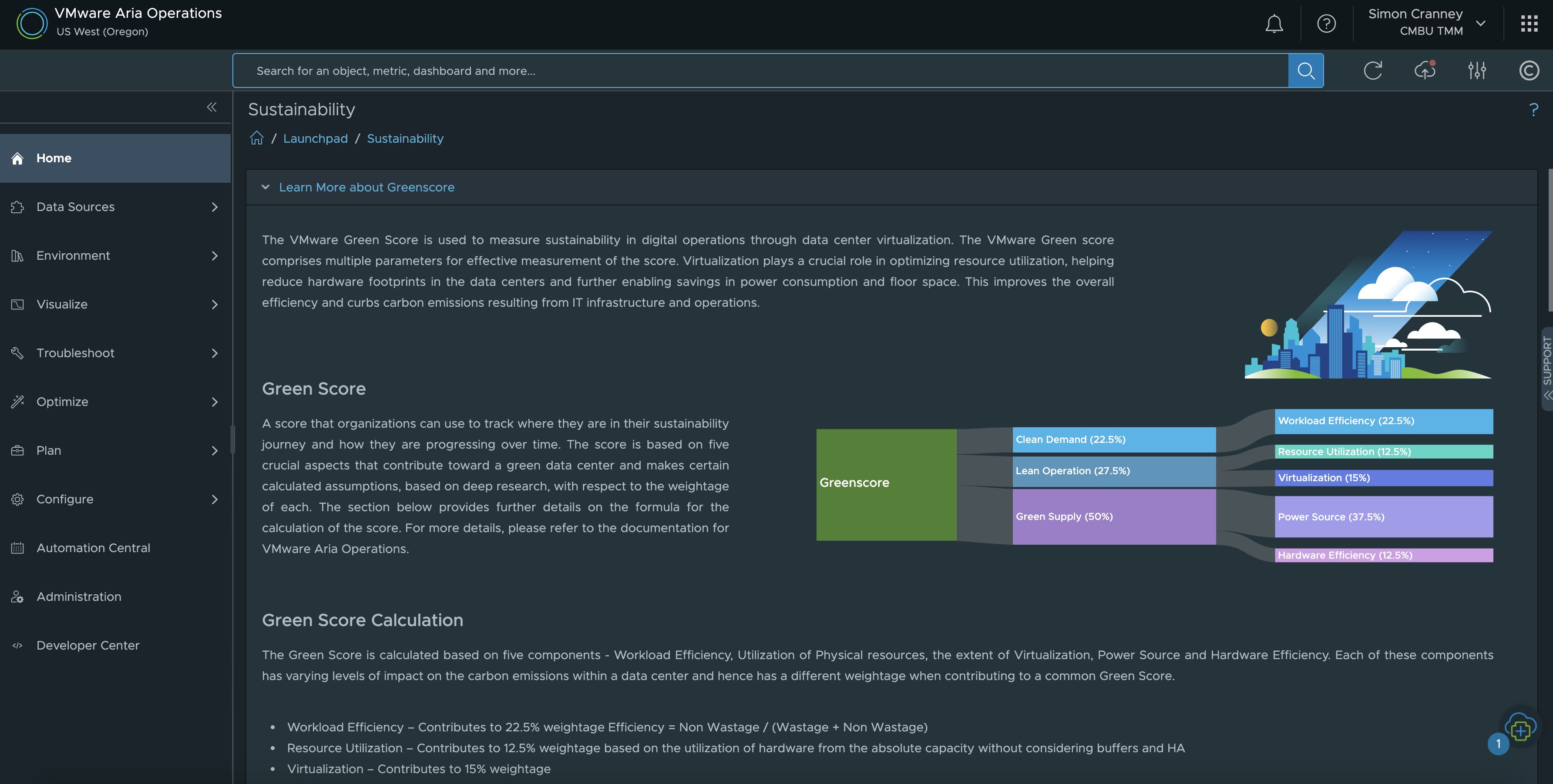

The VMware Aria Operations Green Score feature provides recommendations for customers to optimise energy usage and carbon footprint. The score factors in workload and hardware efficiency, resource utilisation, virtualisation rate, and power source. You can read more about this topic in the VMware Green Score in Aria Operations blog and Configuring Green Score to Track Sustainability documentation.

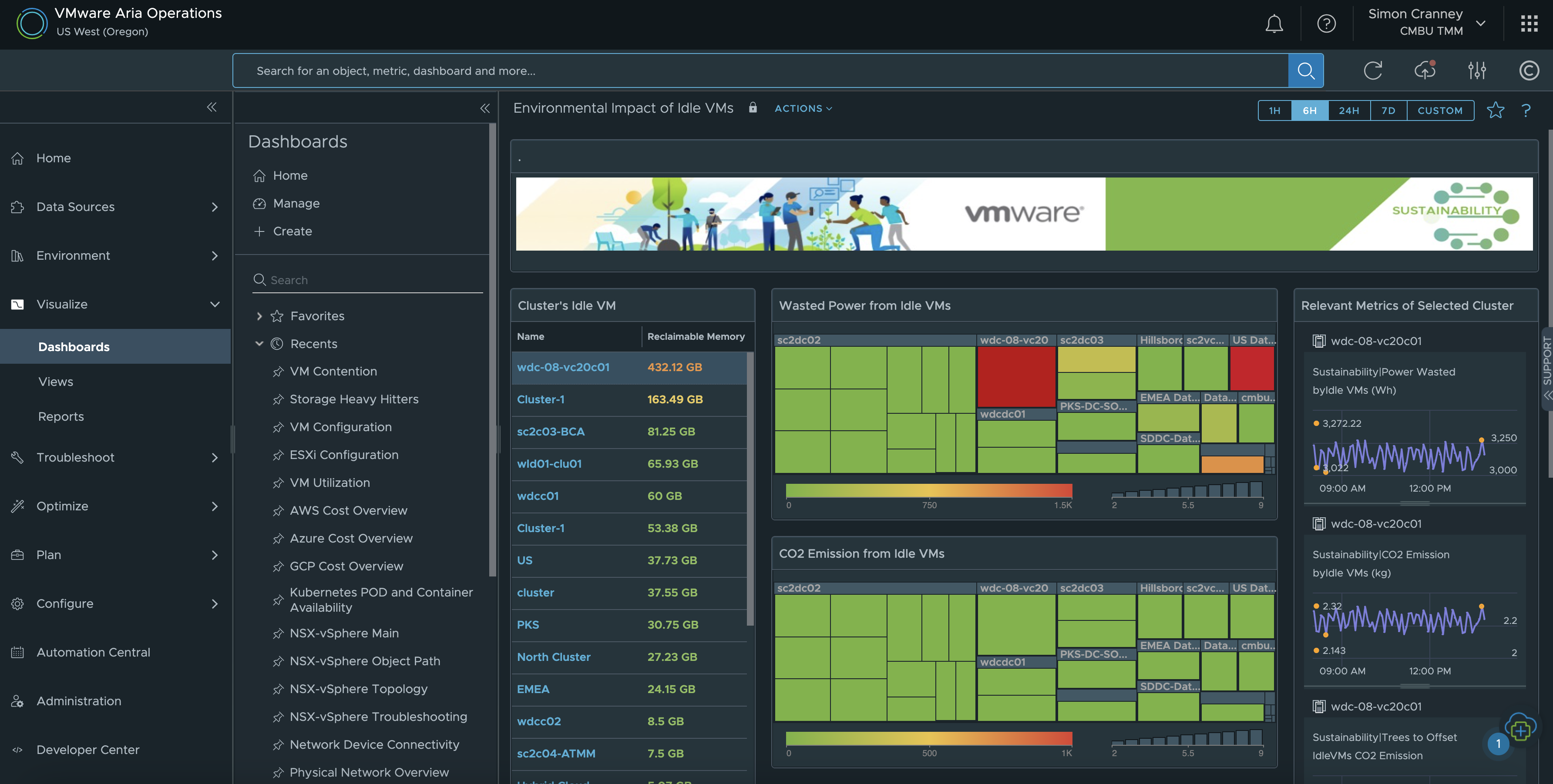

The built-in Aria Operations Sustainability Dashboards provide visibility into sustainability optimisation and emissions reduced by using VMware technologies. The available dashboards at the time of writing include: carbon transparency, carbon efficiency with virtualisation, environmental impact of idle VMs, and green supply. These are complementary to existing features that already help customers manage their energy consumption, such as workload right-sizing, resource reclamation and capacity management.

The sustainability features in Aria Operations are discussed in detail in the Improve Cloud Optimisation and Sustainability Stance with VMware Aria Operations session at VMware Explore. In this session, the internal VMware IT team (VMware on VMware) talk about how they operationalise the green score and recommendations within VMware’s own IT infrastructure.

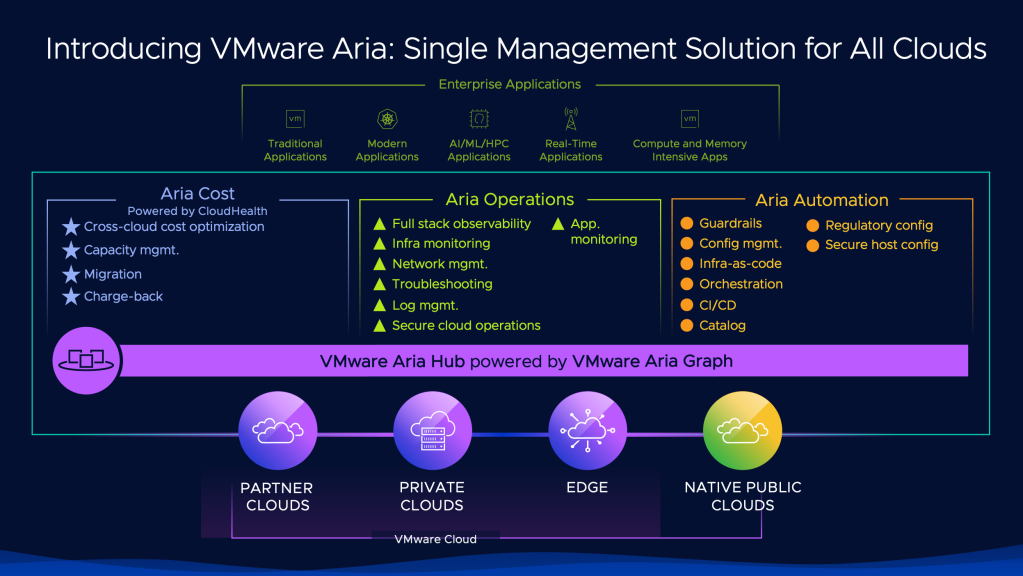

With Aria Cloud Suite, available as Software as a Service (SaaS), customers can benefit from Aria Automation as well as Aria Operations. Additional efficiencies from automation and on-demand infrastructure can be gained with Aria Automation (formerly vRealize Automation). Automating the deployment of infrastructure services allows for standardisation and desired state configuration, as well as just-in-time provisioning. Environments such as test and development can be spun up and torn down easily, providing infrastructure efficiencies especially when paired with cloud services.

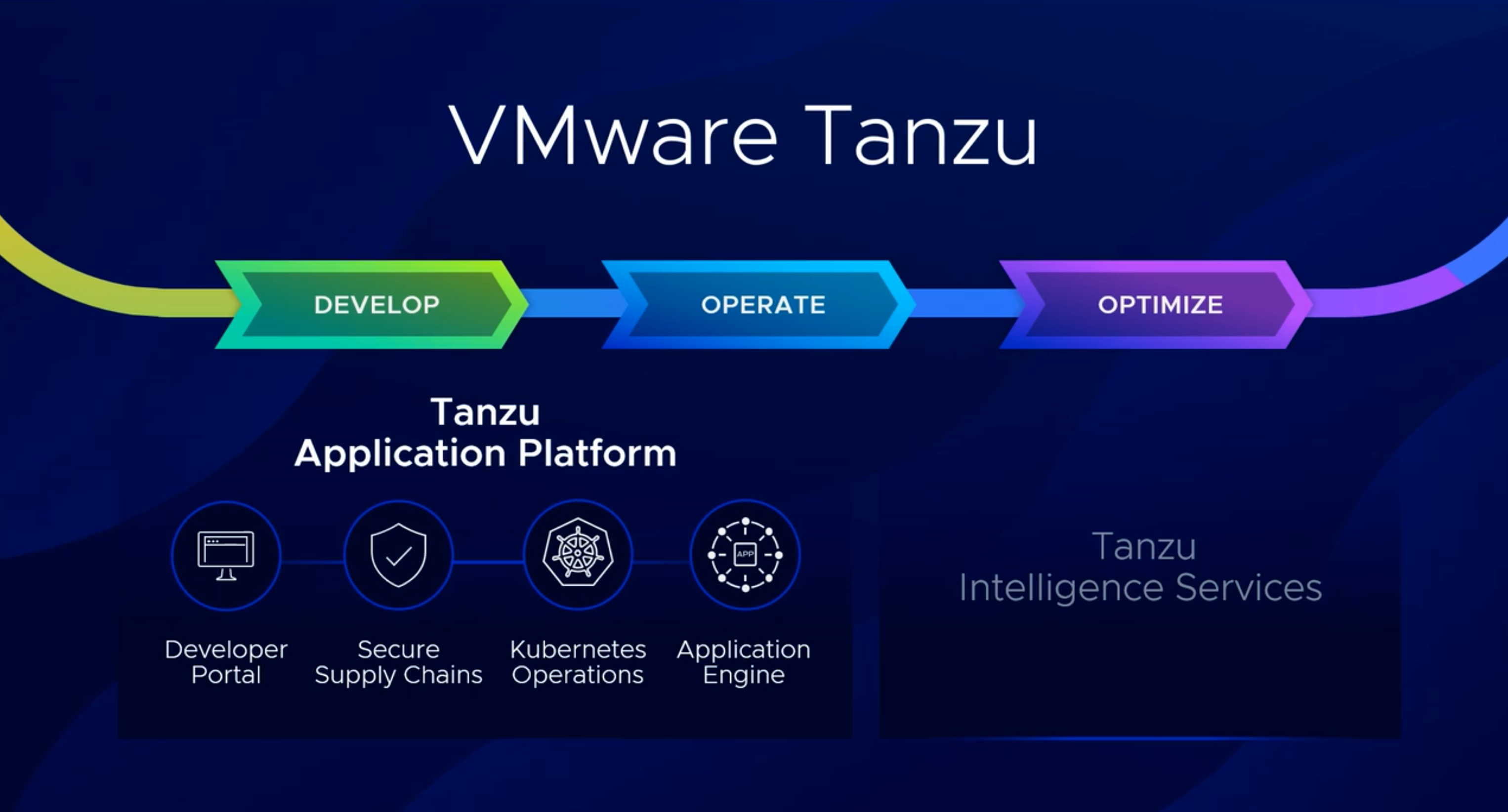

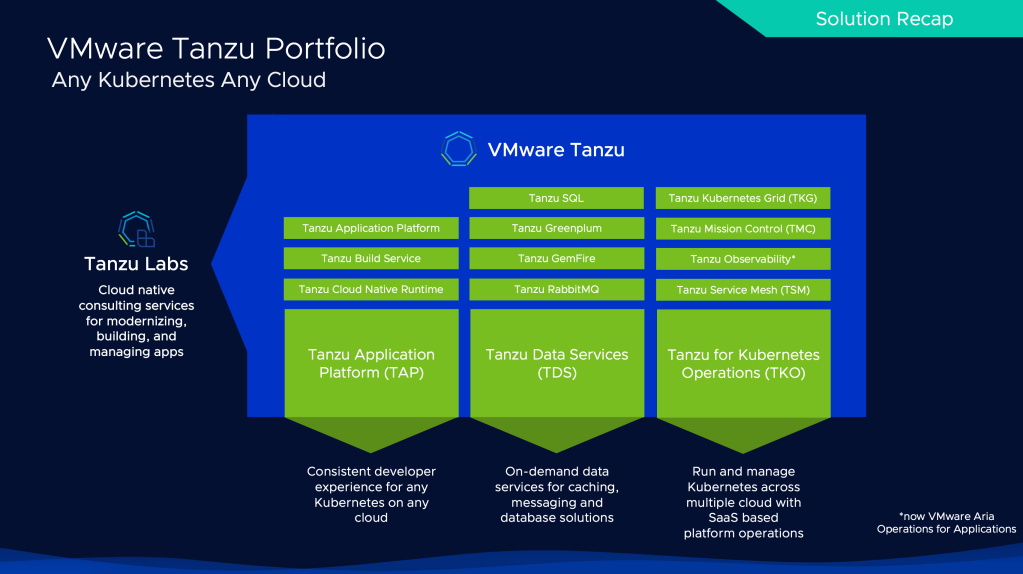

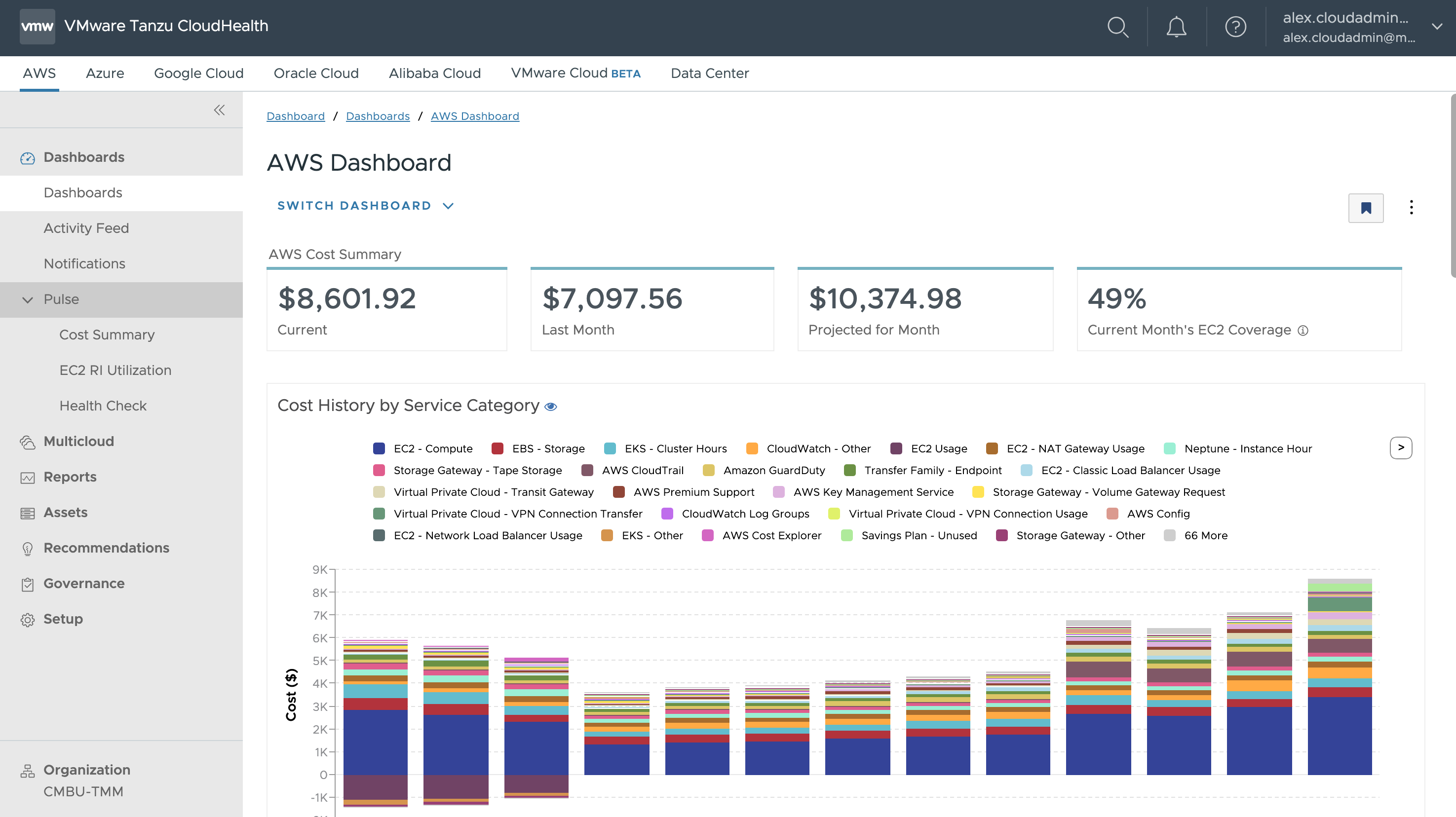

Public cloud users can optimise resources and spend with VMware Tanzu CloudHealth. Sustainability reporting for multi-cloud is now in private beta.

CloudHealth provides actionable insights and recommendations to optimise cost and governance in public cloud. It is aligned with FinOps practices, and has more recently integrated GreenOps too. GreenOps starts with visibility: taking a baseline of the current multi-cloud operational emissions in order to identify optimisation and remediation opportunities. Next, and much like FinOps, the right teams need to be on board to take action, along with the right reporting and governance structure.

The GreenOps feature in CloudHealth is currently in private beta. It includes a multi-cloud dashboard and reports with carbon emissions, power consumption and equivalencies, for all compute instances and regions for the three major cloud providers. You can learn more about CloudHealth for FinOps and GreenOps practices in the VMware Explore session recording for From FinOps to GreenOps – Public Cloud Sustainability and Compliance.

Extend compute virtualisation benefits to the Software Defined Data Centre (SDDC) with VMware Cloud Foundation (VCF).

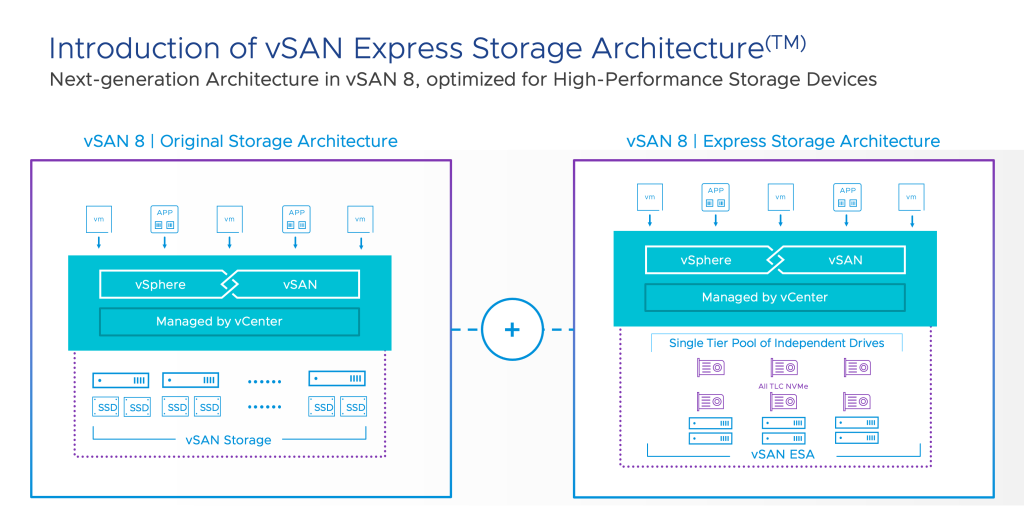

The benefits of compute virtualisation with vSphere are well documented, but they also extend out to storage virtualisation with vSAN and network virtualisation with NSX. These components bundled together are known as VMware Cloud Foundation (VCF). VCF provides a consistent operational building block for a Software Defined Data Centre (SDDC) in the data centre, private cloud or public cloud, and at the edge. The consolidation of hardware into Hyper-Converged Infrastructure (HCI) has an immediate impact on carbon footprint, reducing data centre racks, power consumption, cooling, and removing the need for external storage arrays. Beyond switching and routing, networking capabilities like load balancing, firewalls, and Intrusion Detection and Prevention Systems (IDPS) that previously required dedicated hardware and data centre space can now be run in software.

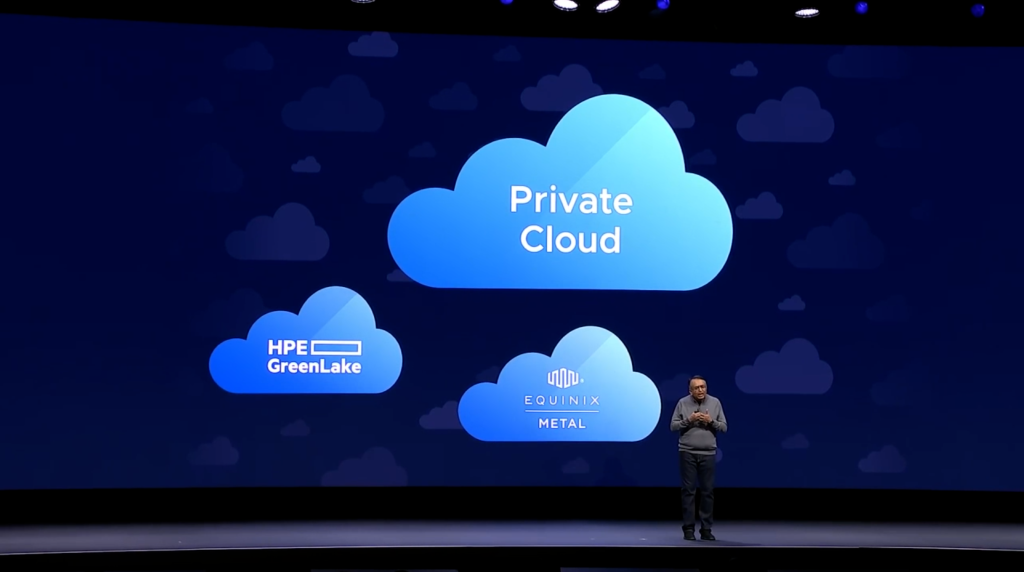

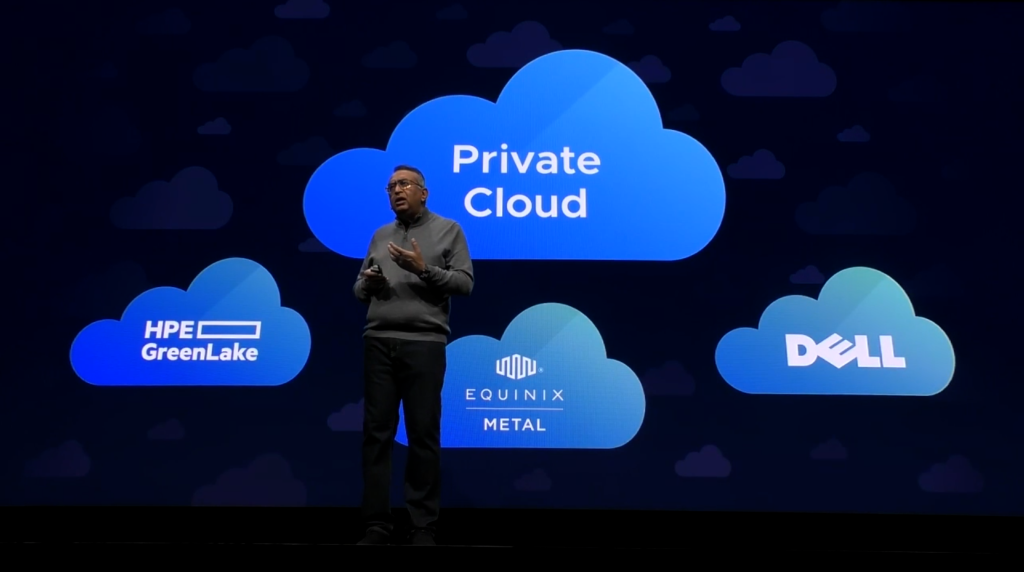

Hosting providers are becoming more competitive in their electricity consumption. This is measured by Power Usage Effectiveness (PUE): the total energy (kWh) consumed by the data centre facility divided by the energy used by the IT equipment itself, so a value closer to 1 means less energy is spent on overheads such as cooling. At a recent customer supplier day I learnt that IT currently accounts for around 2% of emissions globally, but this is projected to rise to 10% by 2030. Every organisation is being tasked with digitalisation at the same time as reducing its carbon emissions, so it is vital to choose the right hosting partners. With VCF, customers have the flexibility to either self-manage their infrastructure whilst selecting a data centre premises with a lower PUE, or consume their infrastructure as a managed service. At the time of writing there are 74 cloud providers approved as VMware Zero Carbon Committed Cloud Providers.
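To make the PUE measure concrete, here is a minimal Python sketch of the calculation. The meter readings are hypothetical figures chosen for illustration, not data from any real facility, and the function name `pue` is my own.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT equipment energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical monthly meter readings (illustrative only)
total_kwh = 160_000.0  # everything the facility draws: IT, cooling, lighting, power losses
it_kwh = 100_000.0     # energy delivered to servers, storage and network equipment

site_pue = pue(total_kwh, it_kwh)
overhead_share = 1 - 1 / site_pue  # fraction of total energy that never reaches IT equipment

print(f"PUE: {site_pue:.2f}")
print(f"Share of energy spent on overhead: {overhead_share:.1%}")
```

A PUE of exactly 1.0 would mean every kWh reaches the IT equipment, which is why a lower figure indicates a more efficient facility.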

Migrating to public cloud unlocks world-class, optimised data centres with lower energy usage, and VMware increases migration speed while reducing risk and cost.

All the benefits of VCF on-premises can also be delivered off-premises, in renewable energy powered cloud data centres. The hosts used by solutions like VMware Cloud on AWS typically allow for reduced hardware, with better consolidation ratios and on-demand scaling capabilities. Data centres run by hyperscalers are optimised and utilised at a scale that could never be achieved by an individual organisation; the three major cloud providers have PUE values of between 1.1 and 1.3, whereas the local data centre average in Europe is 1.6.
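As a rough illustration of what that gap means, the sketch below takes the figures quoted above as PUE values of 1.1, 1.3 and 1.6 and applies them to the same IT load. The 100,000 kWh load is a hypothetical number, and the comparison deliberately ignores that a migration usually changes the IT load itself through consolidation.

```python
def facility_energy_kwh(it_load_kwh: float, pue: float) -> float:
    """Total facility energy needed to deliver a given IT load at a given PUE."""
    return it_load_kwh * pue


IT_LOAD_KWH = 100_000.0  # hypothetical annual IT load, held constant across scenarios

# PUE values as read from the text above
scenarios = {
    "hyperscaler (low end)": 1.1,
    "hyperscaler (high end)": 1.3,
    "European local average": 1.6,
}

baseline_kwh = facility_energy_kwh(IT_LOAD_KWH, scenarios["European local average"])

savings_kwh = {}
for name, value in scenarios.items():
    total = facility_energy_kwh(IT_LOAD_KWH, value)
    savings_kwh[name] = baseline_kwh - total
    print(f"{name}: {total:,.0f} kWh total, {savings_kwh[name]:,.0f} kWh less than the local average")
```

Under these assumptions, the same workload draws roughly 19% to 31% less facility energy in a hyperscale data centre before any consolidation gains are counted.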

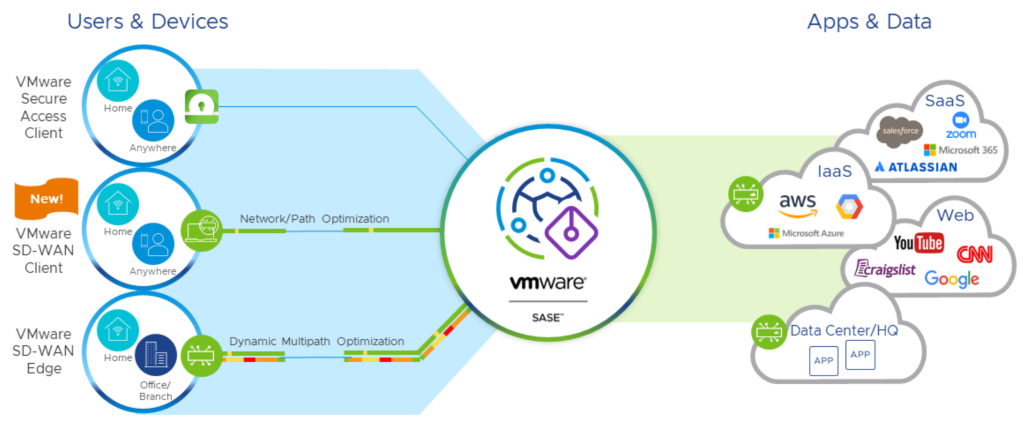

Many organisations will still retain an on-premises or hybrid footprint, whilst migrating to a combination of SaaS and public cloud services. Public cloud can help replace unnecessary energy consumption, such as always-on dedicated storage arrays for archive data, with cold or on-demand infrastructure. In conjunction with cloud computing, further opportunities are presented for consolidating and optimising network infrastructure with SD-WAN.

With VMware Cloud Disaster Recovery, customers can deploy copies of production environments on-demand for a true Disaster Recovery as a Service (DRaaS) model.

Traditionally, organisations have had to double up on IT infrastructure to provide Disaster Recovery (DR) capabilities essential for business continuity. This model presents obvious drawbacks in the doubling of cost, hardware, power consumption, operational support, and maintenance, and it has a big impact on sustainability initiatives. VMware Cloud Disaster Recovery (VCDR) enables a DRaaS model that can potentially remove or reduce the need for a complete secondary infrastructure stack. VCDR replicates immutable copies of workloads to a cloud-based scale-out file system. In the event of an outage or ransomware attack, VCDR mounts the file system to on-demand infrastructure using VMware Cloud on AWS. This approach significantly reduces the overhead of DR cost and carbon footprint.

- Reduce travel and transport:

  - Digitally enabled care models and channels for citizens that will significantly reduce travel and journeys to physical healthcare locations, with care closer to home being delivered through remote consultations and monitoring.

  - Approximately 3.5% (9.5 billion miles) of all road travel in England relates to patients, visitors, staff and suppliers to the NHS, contributing around 14% of the system’s total emissions.
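To put the travel figures above into perspective, here is a small Python sketch of the arithmetic. The share and mileage come from the text; the 10% reduction scenario is purely hypothetical and is not an NHS target.

```python
# Figures quoted above
nhs_share_of_road_travel = 0.035  # about 3.5% of all road travel in England
nhs_miles_billion = 9.5           # NHS-related road travel, in billions of miles

# Total road travel implied by those two figures
total_miles_billion = nhs_miles_billion / nhs_share_of_road_travel
print(f"Implied total road travel in England: ~{total_miles_billion:.0f} billion miles")

# Hypothetical scenario: digitally enabled care removes 10% of NHS-related journeys
hypothetical_reduction = 0.10
miles_avoided_billion = nhs_miles_billion * hypothetical_reduction
print(f"Miles avoided in this scenario: {miles_avoided_billion:.2f} billion")
```

Even a modest shift towards remote consultations and monitoring therefore translates into a very large number of journeys avoided.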

The solutions mentioned above provide the underlying resilient and secure foundation for digitally enabled services. Promoting self-service through modern, internet-facing applications, along with Integrated Care Board and Partnership collaborations, can also help improve accessibility and further reduce overall carbon footprint.

Take advantage of a distributed workforce and offer users both flexibility and consistency with VMware’s End User Computing (EUC) solutions.

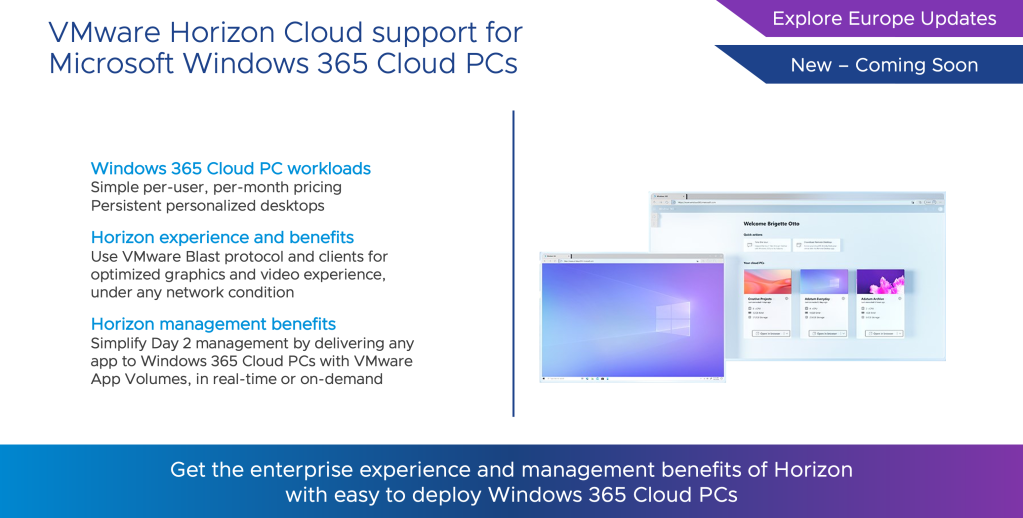

Remote work has a number of immediate benefits for carbon emissions across all industries. The obvious ones include less commuter travel and optimising the way organisations use their buildings. Where there are customer-facing, or in this example patient-facing, activities, the commute of patients and visitors is also removed. In healthcare, published desktops and applications with VMware Horizon and Workspace ONE enable use cases such as telemedicine and teleradiology, to reduce waiting times and access a wider talent pool of remote clinicians.

It’s not just about remote and distributed workforces either. Those with fixed locations can still reduce their carbon footprint through Virtual Desktop Infrastructure (VDI) delivery, by using low-power thin client devices instead of thick clients. Features like multi-session desktops enable consolidation of compute power for multiple people accessing the same applications. In many cases, a full desktop instance isn’t needed and applications can be published directly to the relevant role.

The transition of applications and services to edge locations can be enhanced with SD-WAN and Secure Access Service Edge (SASE). In healthcare, these technologies support examples like virtual wards and community or pop-up services, reducing the carbon impact of delivering acute care.